Krylov subspace


In linear algebra, the order-$r$ Krylov subspace generated by an $n$-by-$n$ matrix $A$ and a vector $b$ of dimension $n$ is the linear subspace spanned by the images of $b$ under the first $r$ powers of $A$ (starting from $A^0 = I$), that is,

$$\mathcal{K}_r(A, b) = \operatorname{span}\,\{b, Ab, A^2 b, \ldots, A^{r-1} b\}.$$
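
As a small worked illustration of this definition (the matrix and vector below are chosen here purely as an example and do not come from the article), take

$$A = \begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}, \qquad b = \begin{pmatrix} 0 \\ 1 \end{pmatrix}, \qquad Ab = \begin{pmatrix} 1 \\ 1 \end{pmatrix}, \qquad A^2 b = \begin{pmatrix} 2 \\ 1 \end{pmatrix}.$$

The order-1 subspace is the line spanned by $b$. The order-2 subspace is spanned by $b$ and $Ab$, which are linearly independent, so it is already all of $\mathbb{R}^2$. The order-3 subspace adds $A^2 b$ but cannot grow further. If instead $b = (1, 0)^T$, then $Ab = b$, so $b$ is an eigenvector and every Krylov subspace it generates is the same one-dimensional line.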

It is named after Russian applied mathematician and naval engineer Alexei Krylov, who published a paper on the subject in 1931.[1]

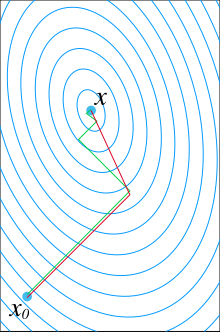

Modern iterative methods for finding one (or a few) eigenvalues of large sparse matrices or solving large systems of linear equations avoid matrix-matrix operations and instead multiply vectors by the matrix and work with the resulting vectors. Starting with a vector $b$, one computes $Ab$, then multiplies that vector by $A$ to find $A^2 b$, and so on. All algorithms that work this way are referred to as Krylov subspace methods; they are among the most successful methods currently available in numerical linear algebra.
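
The repeated multiplication described above can be sketched in a few lines of Python. This is a minimal illustration assuming NumPy; the function name `krylov_matrix` is a hypothetical helper introduced here, not part of any library. Each new column is obtained from the previous one with a single matrix-vector product, so no power of the matrix is ever formed explicitly.

```python
import numpy as np

def krylov_matrix(A, b, r):
    """Return the n-by-r matrix whose columns are b, Ab, ..., A^(r-1) b.

    Hypothetical helper for illustration; assumes r >= 1.
    """
    b = np.asarray(b, dtype=float)
    K = np.empty((b.shape[0], r))
    K[:, 0] = b
    for j in range(1, r):
        # One matrix-vector product per step; A^j itself is never computed.
        K[:, j] = A @ K[:, j - 1]
    return K

A = np.array([[1.0, 1.0],
              [0.0, 1.0]])
b = np.array([0.0, 1.0])
K = krylov_matrix(A, b, 3)
# The column space of K is the order-3 Krylov subspace of A and b.
print(K)
print(np.linalg.matrix_rank(K))
```

In floating-point arithmetic the columns of such a matrix tend to become nearly linearly dependent as more of them are added, which is one reason practical methods orthogonalize the vectors as they go rather than using them directly.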

The best-known Krylov subspace methods are the Arnoldi, Lanczos, conjugate gradient, GMRES (generalized minimum residual), BiCGSTAB (biconjugate gradient stabilized), QMR (quasi minimal residual), TFQMR (transpose-free QMR), and MINRES (minimal residual) methods.
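
To give a flavor of how one of these methods works, the following is a minimal sketch of the Arnoldi iteration in its common textbook form with modified Gram-Schmidt orthogonalization. It assumes NumPy; the function name `arnoldi` and the breakdown tolerance `tol` are choices made for this illustration, not a reference implementation. It builds an orthonormal basis `Q` of the Krylov subspace together with an upper Hessenberg matrix `H` of projection coefficients.

```python
import numpy as np

def arnoldi(A, b, r, tol=1e-12):
    """Sketch of r steps of the Arnoldi iteration (modified Gram-Schmidt).

    Returns Q (n x (r+1)) with orthonormal columns and H ((r+1) x r) upper
    Hessenberg such that A @ Q[:, :r] is approximately Q @ H. If the Krylov
    subspace stops growing after m steps, returns Q (n x m) and H (m x m).
    """
    b = np.asarray(b, dtype=float)
    n = b.shape[0]
    Q = np.zeros((n, r + 1))
    H = np.zeros((r + 1, r))
    Q[:, 0] = b / np.linalg.norm(b)
    for j in range(r):
        w = A @ Q[:, j]                 # the only use of A: a matrix-vector product
        for i in range(j + 1):          # orthogonalize against the previous basis vectors
            H[i, j] = Q[:, i] @ w
            w = w - H[i, j] * Q[:, i]
        H[j + 1, j] = np.linalg.norm(w)
        if H[j + 1, j] < tol:           # the subspace is invariant under A; stop early
            return Q[:, : j + 1], H[: j + 1, : j + 1]
        Q[:, j + 1] = w / H[j + 1, j]
    return Q, H

rng = np.random.default_rng(0)
A = rng.standard_normal((6, 6))
b = rng.standard_normal(6)
Q, H = arnoldi(A, b, 3)
print(np.allclose(Q.T @ Q, np.eye(Q.shape[1])))   # columns are orthonormal
print(np.allclose(A @ Q[:, :3], Q @ H))           # Arnoldi relation holds
```

Orthogonalizing at every step keeps the basis well conditioned, in contrast to using the raw vectors $b, Ab, A^2 b, \ldots$ directly.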

References